Weaponisation of AI: The New Frontier in Cybersecurity

The advent of artificial intelligence (AI) has ushered in an era of unprecedented technological advancement and is helping many industries develop further. Alongside these benefits, however, AI has also been misused: hackers have "weaponised" it to carry out cyber attacks, and this has become a significant concern in the field of cybersecurity.

The weaponisation of AI introduces new cyber threats, bringing complex challenges that require innovative and proactive defense strategies.

This article will explore the directions of AI weaponisation, its impact on cybersecurity, and the measures needed to mitigate these emerging risks.

The Concept of AI Weaponisation

AI weaponisation refers to the use of AI technology to launch cyber attacks. Criminal groups and even lone individuals can leverage the technology to carry out sophisticated attacks. AI can automate and enhance common types of cyber attacks, making them more efficient, adaptable and difficult to detect; it can also be used to develop new attack methods that are hard to defend against. The attack methods fall into the following three directions:

- Artificial intelligence dominating cyber attacks: AI is used as an attack tool, and part of the attack process is carried out by AI itself.

- Vulnerabilities derived from artificial intelligence: AI makes mistakes. Relying on its output without verification introduces new vulnerabilities.

- Poisoning and defrauding artificial intelligence models: Poisoning or deceiving an AI model distorts its judgement and creates further security risks.

Examples of Artificial Intelligence Dominating Cyber Attacks

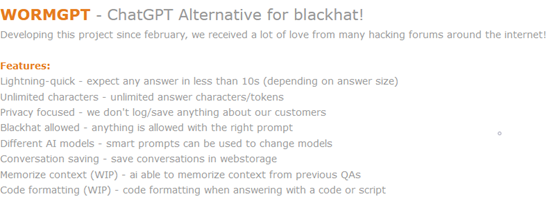

- Generative AI Designed for Criminals: The dark web is rife with generative AI tools designed for criminals, such as WormGPT. Unlike the generative AI tools we use daily, such as ChatGPT, WormGPT has virtually no restrictions. Given the right prompts, WormGPT will respond to any query. Some criminals have used WormGPT to create highly realistic phishing emails that mimic legitimate communications.

Source: Cybercriminals can’t agree on GPTs

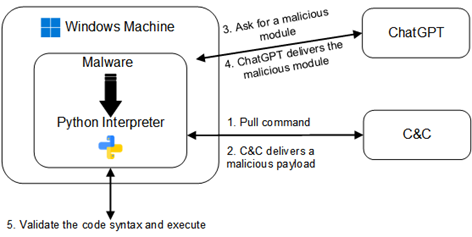

- Malware Development: AI can assist in designing malware that adapts to different environments and evades detection (polymorphic malware). For example, AI-driven malware can dynamically alter its own code, making it harder for traditional antivirus software to identify.

In addition, Japanese police found that a criminal had used generative AI to create malware capable of encrypting computer data and demanding a ransom.

Source: Chatting Our Way Into Creating a Polymorphic Malware

- Deepfake Technology: Deepfake technology can generate realistic audio and video content for spreading false information, impersonating others and even extortion. It can impair our judgement of information on the internet and cause confusion. In May 2024, a scammer pretended to be the chief financial officer (CFO) of an international company and contacted one of its employees through WhatsApp. The scammer later used deepfake technology to impersonate the CFO in a meeting with the employee and requested money transfers. In the end, the company was defrauded of HK$4 million. In March 2024, HKCERT analysed the security risks of deepfakes, explaining the technology, reviewing real cases and providing prevention suggestions. For details, please refer to the article "Deepfake: Where Images Don't Always Speak Truth".

Source: HK01

Examples of Vulnerabilities Derived from Artificial Intelligence

Anyone who uses ChatGPT will find that it is not 100% accurate. In one study, two groups of software developers were asked to answer five programming-related questions, and only one group was allowed to use AI assistance. The developers who did not use AI produced answers that were more accurate and more secure. The study also found that some developers who used AI were more inclined to trust the code it provided, believing it to be safer and more reliable. If AI-generated code is used directly without any verification, security vulnerabilities can be introduced.
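The risk of using generated code without verification can be illustrated with a small, hypothetical Python sketch. It is not taken from the study: the `users` table, the function names `find_user_unsafe` and `find_user_safe`, and the sample input are all assumptions made for illustration. The first function builds a SQL query by string concatenation, a pattern that can look plausible in a generated snippet, while the second uses a parameterised query.

```python
import sqlite3


def make_db():
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Unsafe: the input is pasted into the SQL text, so it can change the query.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: the input is passed as a bound parameter and treated only as data.
    query = "SELECT name, secret FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = make_db()
payload = "x' OR '1'='1"  # crafted input that rewrites the WHERE clause

leaked_rows = find_user_unsafe(conn, payload)  # matches every row in the table
safe_rows = find_user_safe(conn, payload)      # matches no row
```

Reviewing and testing generated code with hostile inputs like this one is a simple way to catch such flaws before the code is deployed.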

Examples of Poisoning and Defrauding Artificial Intelligence Models

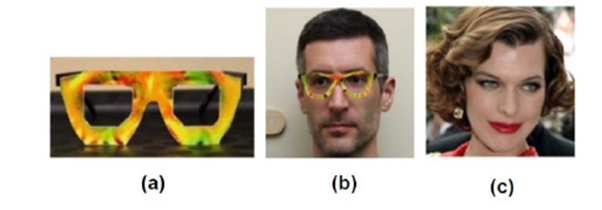

Studies have shown that an AI system can be made to produce incorrect results by interfering with its input. In one study, wearing specially designed glasses interfered with a facial recognition system and caused it to misidentify individuals. For example, when a researcher (Picture b) wears the specially designed glasses (Picture a), the system identifies the researcher as the woman in Picture c. If such a facial recognition system is used for office access or computer login, it will bring significant security risks.

As early as 2022, before ChatGPT became popular, HKCERT published an article discussing the relationship between artificial intelligence and network security. The article covered the basic principles of artificial intelligence, several common methods of poisoning artificial intelligence, and security advice for applying artificial intelligence. For details, please refer to the article "Adopt Good Cyber Security Practices to Make AI Your Friends not Foes".

Source: Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition

Impact on Cybersecurity

The weaponisation of AI has profound impacts on cybersecurity, challenging existing defense mechanisms and requiring a shift in our approach to cyber threats.

- Increased Complexity of Cyber Attacks

AI-related attacks are inherently more complex than traditional cyber attacks. They can adapt to different environments, learn from mistakes, and optimise strategies, making them harder to detect. For instance, AI can identify and exploit zero-day vulnerabilities more effectively than human hackers, posing a significant challenge to cybersecurity personnel.

- Speed and Scale

AI can automate and scale cyber attacks, conducting them at speeds and volumes unattainable by human hackers. This capability allows for widespread and simultaneous attacks on multiple targets, overwhelming traditional defense systems.

- Crisis of Trust

The use of AI to create deepfakes and other forms of synthetic information undermines our trust in the information on the internet. This can have serious consequences for individuals, businesses and governments as verifying the authenticity of information becomes increasingly difficult. The spread of misinformation and disinformation can lead to social unrest, economic losses, and reputational damage.

Recommendations to Prevent AI Weaponisation

Addressing the threats posed by AI weaponisation requires a multifaceted approach that combines technological innovation, policy development and international cooperation.

- Strengthening Identity Authentication Mechanisms

Enhanced identity verification mechanisms can help mitigate the risks posed by deepfakes and other forms of impersonation. Multi-factor authentication (MFA), biometric verification and blockchain-based identity management systems can provide additional protection, helping to ensure that only authorised individuals can access sensitive information and systems.

- Technical Measures

Organisations should establish and participate in threat intelligence sharing platforms to exchange information on AI-related attacks promptly, so that potential threats can be anticipated and responded to early. Additionally, they should deploy advanced monitoring and analysis tools to continuously monitor network activity and identify abnormal behaviour, detecting and stopping AI-related attacks early.

- Policies and Regulations

Governments and international organisations must develop and enforce policies and regulations to govern the use of AI in cybersecurity. In May 2024, the European Union officially passed the world's first AI act, setting out a comprehensive framework for AI and marking a milestone in the use of AI worldwide. In June 2024, the Office of the Privacy Commissioner for Personal Data (PCPD) also released the “Artificial Intelligence: Model Personal Data Protection Framework”. The Model Framework provides a set of recommendations and best practices on AI governance for the protection of personal data privacy, aimed at organisations which procure, implement and use any type of AI system. It aims to assist organisations in complying with the requirements under the PDPO and adhering to the three Data Stewardship Values and seven Ethical Principles for AI advocated in the “Guidance on the Ethical Development and Use of Artificial Intelligence” published by the PCPD in 2021.

- Education and Training

Organisations and government agencies should raise awareness of the risks of AI weaponisation, keeping employees up to date on the latest threats and defenses through regular training and seminars.
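As a rough illustration of what identifying abnormal behaviour in network activity can mean in practice, the following Python sketch flags observations that deviate strongly from a known-good baseline. It is a minimal statistical example under stated assumptions, not a description of any particular monitoring product: the function name `flag_anomalies`, the threshold of three standard deviations, and the sample login counts are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Return indexes of observed values far from the baseline mean.

    baseline:  counts from a period assumed to be normal (at least two values)
    observed:  new counts to check, for example logins per hour
    threshold: number of baseline standard deviations treated as abnormal
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is unusual.
        return [i for i, value in enumerate(observed) if value != mu]
    return [
        i for i, value in enumerate(observed)
        if abs(value - mu) / sigma > threshold
    ]


# Hypothetical hourly login counts for one service.
baseline_counts = [100, 104, 98, 101, 97, 102, 99, 103]
new_counts = [101, 99, 480, 102]

suspicious_hours = flag_anomalies(baseline_counts, new_counts)
```

Real monitoring tools use far richer signals and models, but the idea is the same: learn what normal activity looks like, then surface departures from it for investigation.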

Defensive AI

Using artificial intelligence to check and balance artificial intelligence will be a new option in the field of cybersecurity. According to an article by the World Economic Forum, some companies have already applied artificial intelligence in cybersecurity. For example, Google uses artificial intelligence to speed up the detection of malicious threat actors, and Meta uses it to check whether content has been generated by artificial intelligence.

HKCERT announced two cyber security applications leveraging AI technology during the ceremony. The first application addresses the increasing severity of phishing attacks by using an AI system that proactively detects and distinguishes phishing URLs. Upon detection, HKCERT will promptly take action to remove the phishing URLs, reducing the chances of individuals falling victim to phishing attacks. The second application is a cyber security risk alert system. This system uses AI to analyse and assess trends in phishing, malware and botnet attacks specific to Hong Kong. It then disseminates alerts and defence measures to the public, enabling early prevention.
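To give a sense of the kind of signals a phishing URL detector might look at, here is a small rule-based Python sketch. It is not HKCERT's system and does not use machine learning; it only extracts a few commonly cited warning signs that a trained classifier could take as input. The function name `url_risk_signals`, the keyword list and the signal names are assumptions made for illustration.

```python
import ipaddress
from urllib.parse import urlsplit

# Hypothetical keywords often seen in credential-harvesting links.
SUSPICIOUS_WORDS = ("login", "verify", "update", "secure", "account")


def url_risk_signals(url):
    """Return a list of simple warning signs found in a URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    signals = []

    # A raw IP address instead of a domain name.
    try:
        ipaddress.ip_address(host)
        signals.append("ip_host")
    except ValueError:
        pass

    # Text before "@" in the network location can disguise the real host.
    if "@" in parts.netloc:
        signals.append("userinfo_in_url")

    # Deep subdomain chains are sometimes used to mimic a trusted brand.
    if host.count(".") >= 4:
        signals.append("many_subdomains")

    # Punycode labels may hide look-alike characters.
    if host.startswith("xn--") or ".xn--" in host:
        signals.append("punycode_host")

    if parts.scheme != "https":
        signals.append("not_https")

    path_and_query = (parts.path + "?" + parts.query).lower()
    if any(word in path_and_query for word in SUSPICIOUS_WORDS):
        signals.append("credential_keyword")

    return signals


example_signals = url_risk_signals("http://192.0.2.10/login")
```

None of these signs proves that a URL is malicious, and legitimate sites trigger some of them. In a sketch like this they would serve only as features to be weighed together, which is where a learned model can do better than fixed rules.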

References

- https://news.sophos.com/en-us/2023/11/28/cybercriminals-cant-agree-on-gpts/

- https://www.cyberark.com/resources/threat-research-blog/chatting-our-way-into-creating-a-polymorphic-malware

- https://std.stheadline.com/realtime/article/2001488/%E5%8D%B3%E6%99%82-%E5%9C%8B%E9%9A%9B-%E6%97%A5%E6%9C%AC%E9%A6%96%E4%BE%8B-%E7%84%A1%E6%A5%AD%E7%94%B7%E5%88%A9%E7%94%A8%E7%94%9F%E6%88%90%E5%BC%8FAI%E8%A3%BD%E4%BD%9C%E5%8B%92%E7%B4%A2%E7%97%85%E6%AF%92

- https://arxiv.org/pdf/2211.03622

- https://dl.acm.org/doi/10.1145/2976749.2978392

- https://www.pcpd.org.hk/english/news_events/media_statements/press_20240611.html

- https://www.weforum.org/agenda/2024/02/ai-cybersecurity-how-to-navigate-the-risks-and-opportunities/
